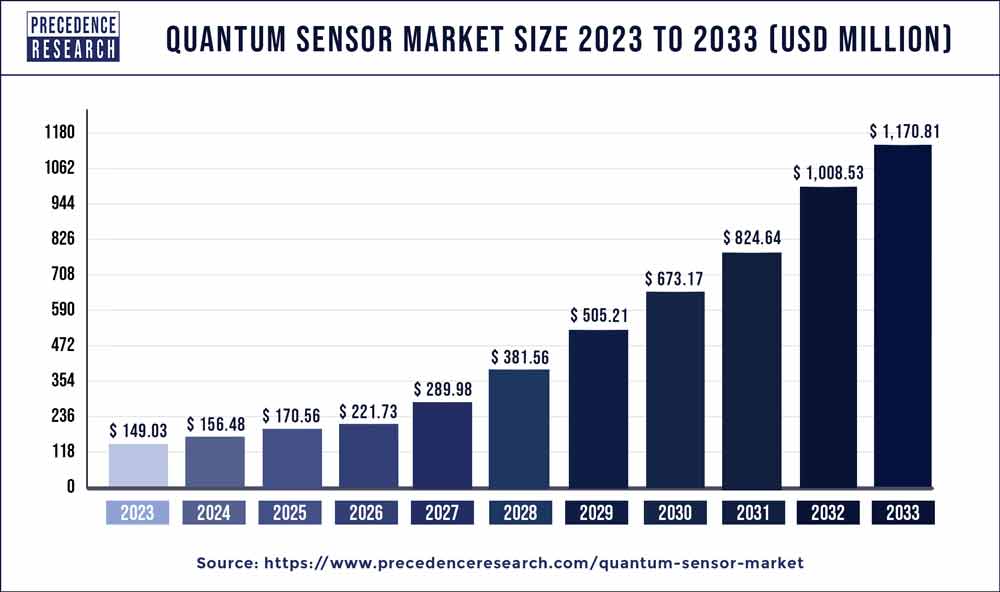

The global high-bandwidth memory (HBM) market is accelerating rapidly, projected to reach USD 59.16 billion by 2034 from USD 7.27 billion in 2025, expanding at a compound annual growth rate (CAGR) of 26.23%. This surge is driven by the escalating demand for ultra-fast, energy-efficient memory solutions critical for artificial intelligence (AI), high-performance computing (HPC), and graphics processing applications.

Why Is the High-Bandwidth Memory Market Growing So Fast?

High-bandwidth memory technologies represent a revolutionary leap over traditional DRAM. By vertically stacking memory dies connected with through-silicon vias (TSVs), HBM enables massively increased data transfer rates combined with lower power consumption.

This makes HBM essential for applications requiring intense data throughput, such as AI model training, gaming GPUs, and machine learning workloads. Ongoing innovation in HBM, notably the arrival of HBM3, is expected to sustain robust market growth through 2034 at a CAGR of 26.23%, bolstered by the booming AI and HPC sectors.
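Much of HBM's bandwidth advantage comes from interface width: stacking and TSVs make a very wide bus practical. As a minimal sketch, assuming JEDEC nominal figures that this report does not itself state (one HBM3 stack with a 1024-bit interface at 6.4 Gb/s per pin, versus one DDR5-4800 module with a 64-bit data bus), peak bandwidth is width times per-pin rate divided by 8 bits per byte. `peak_bandwidth_gbps` is a hypothetical helper name.

```python
def peak_bandwidth_gbps(bus_width_bits: int, pin_rate_gbit_s: float) -> float:
    """Peak bandwidth in GB/s: interface width (bits) x per-pin rate (Gb/s) / 8 bits per byte."""
    return bus_width_bits * pin_rate_gbit_s / 8


# Assumed nominal figures (not from the report):
# one HBM3 stack: 1024-bit interface at 6.4 Gb/s per pin
# one DDR5-4800 module: 64-bit data bus at 4.8 Gb/s per pin
hbm3_stack = peak_bandwidth_gbps(1024, 6.4)
ddr5_module = peak_bandwidth_gbps(64, 4.8)

print(f"HBM3 stack:  {hbm3_stack:.1f} GB/s")
print(f"DDR5 module: {ddr5_module:.1f} GB/s")
print(f"Ratio: {hbm3_stack / ddr5_module:.1f}x")
```

The HBM3 result (819.2 GB/s) is consistent with the "above 800 GB/s per stack" figure quoted for HBM3 in this report; real sustained bandwidth is lower than these theoretical peaks.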

High-Bandwidth Memory Market Key Points

- The market size is USD 7.27 billion in 2025 and is forecast to grow to USD 59.16 billion by 2034.

- Asia Pacific is the dominant region by value, at USD 3.27 billion in 2025 and growing at a 26.38% CAGR.

- North America is the fastest-growing regional market, driven by hyperscale cloud demand and advances in AI accelerators.

- GPUs hold a 40% market share, leading HBM adoption for graphics and heterogeneous computing.

- The semiconductor industry accounts for about 60% of total HBM market volume.

- HBM2 currently dominates with a 50% share, while HBM3 is the fastest-growing type due to its ultra-high speed and efficiency.

Market Revenue Breakdown

| Year | Market Size (USD Billion) |
|---|---|
| 2025 | 7.27 |
| 2026 | 9.18 |
| 2034 | 59.16 |

The Asia Pacific market alone is projected to grow from USD 3.27 billion in 2025 to around USD 26.92 billion by 2034, maintaining a CAGR of 26.38%.
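The growth rates above follow the standard CAGR formula, CAGR = (end / start)^(1 / years) - 1. The sketch below checks the report's own figures, assuming 2025 to 2034 counts as nine compounding years; `cagr` and `project` are illustrative helper names, not part of any library.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` for `years` years."""
    return start * (1 + rate) ** years


# Figures from the report (USD billion); 2025 -> 2034 is assumed to be 9 compounding years.
global_rate = cagr(7.27, 59.16, 9)
apac_rate = cagr(3.27, 26.92, 9)

print(f"Global CAGR: {global_rate:.2%}")
print(f"Asia Pacific CAGR: {apac_rate:.2%}")
print(f"Implied 2026 global size: {project(7.27, global_rate, 1):.2f}")
```

The global rate reproduces the stated 26.23%, and one year of compounding from 2025 gives the USD 9.18 billion shown for 2026. The Asia Pacific result lands close to the stated 26.38%; the small gap is consistent with rounding in the published endpoints.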

A sample of this report, covering global market size, share, CAGR, and trends, with detailed segmental analysis and a competitive landscape overview, is available at https://www.precedenceresearch.com/sample/6977

AI’s Role in the High-Bandwidth Memory Market

Artificial intelligence is a prime driver of HBM adoption. The increasing complexity of AI models has exposed the limits of conventional memory, creating demand for high-speed, low-latency memory systems such as HBM that significantly improve training speed and energy efficiency. HBM keeps data flowing across neural network layers and supports the massive datasets today's AI requires, making it a foundational element of AI chip design from cloud-scale deployments to edge devices.

Furthermore, investments in generative AI, deep learning, and edge inference strengthen HBM demand. Chipmakers are integrating advanced memory controllers to maximize throughput and minimize bottlenecks in AI workloads, making HBM indispensable for next-generation AI accelerators and heterogeneous computing platforms.
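Why memory, rather than raw compute, limits many AI workloads can be illustrated with the roofline model: attainable throughput is the lower of peak compute and memory bandwidth times arithmetic intensity (FLOPs performed per byte moved). The numbers below are hypothetical (a 400 TFLOP/s accelerator and a kernel at 50 FLOPs per byte), not figures from this report, and `attainable_tflops` is an illustrative helper.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float,
                      intensity_flop_per_byte: float) -> float:
    """Roofline model: throughput is capped by peak compute or by
    memory bandwidth x arithmetic intensity, whichever is lower.
    TB/s x FLOP/byte = TFLOP/s, so the units line up."""
    return min(peak_tflops, bandwidth_tb_s * intensity_flop_per_byte)


PEAK = 400.0      # TFLOP/s, hypothetical accelerator peak
INTENSITY = 50.0  # FLOP/byte, hypothetical memory-bound kernel

for bw in (1.0, 2.0, 4.0):  # TB/s, hypothetical memory bandwidths
    print(f"{bw:.1f} TB/s -> {attainable_tflops(PEAK, bw, INTENSITY):.0f} TFLOP/s")
```

While a kernel sits below the ridge point (peak divided by bandwidth), every increase in memory bandwidth raises attainable throughput proportionally, whatever the compute peak. That is the bottleneck higher-bandwidth memory targets.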

What Factors are Driving Market Growth?

Key growth drivers include:

- Increasing demand for advanced computing performance in AI, machine learning, and HPC.

- Expanding graphics-intensive applications such as gaming, 3D rendering, and visualization.

- Innovations in 3D-stacked DRAM architecture and interposer technologies that enhance bandwidth while reducing power use.

- Growing semiconductor industry investment in integrating HBM into multi-chip modules and system-on-chip platforms.

- Rising adoption in emerging sectors such as automotive, for AI-based driver assistance and real-time processing.
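The power benefit of stacked, interposer-based memory comes down to energy per bit: at a fixed bandwidth, interface power scales linearly with the energy spent moving each bit. A minimal sketch, using a hypothetical 1,000 GB/s link and hypothetical 4 pJ/bit and 15 pJ/bit values (not figures from this report); `interface_power_watts` is an illustrative helper.

```python
def interface_power_watts(bandwidth_gb_s: float, energy_pj_per_bit: float) -> float:
    """Power (W) = bits moved per second x energy per bit (J)."""
    bits_per_second = bandwidth_gb_s * 1e9 * 8  # GB/s -> bits/s
    return bits_per_second * energy_pj_per_bit * 1e-12  # pJ -> J


BANDWIDTH = 1000.0  # GB/s, hypothetical sustained bandwidth

# Hypothetical energy-per-bit values, for illustration only.
for label, pj in (("lower-energy link", 4.0), ("higher-energy link", 15.0)):
    print(f"{label}: {interface_power_watts(BANDWIDTH, pj):.1f} W")
```

At the same bandwidth, cutting energy per bit cuts interface power in the same proportion, which is why shorter, wider connections matter for power-constrained accelerators.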

What Opportunities and Trends Are Emerging in High-Bandwidth Memory?

How is HBM expanding beyond traditional markets?

The automotive sector is rapidly embracing HBM for electric and autonomous vehicles, which require fast processing of sensor data for real-time decision-making. In addition, new modular HBM architectures and cost-reduction efforts are opening the door to adoption in mid-tier AI accelerators and edge computing devices.

What technological trends are shaping future HBM development?

Ongoing advances in HBM3 technology, with data transfer rates exceeding 800 GB/s per stack, are reshaping the market. Additive cooling solutions and co-designed memory-compute packaging architectures are enhancing performance and enabling highly dense memory stacks.

Segment Insights:

Why Are GPUs Dominating the High-Bandwidth Memory Market?

Graphics Processing Units (GPUs) hold a dominant 40% share of the high-bandwidth memory (HBM) market. Their synergy with HBM has revolutionized computational efficiency, enabling faster rendering and increased throughput essential for gaming, 3D design, and high-performance computing. The growing demand for immersive visuals, ray tracing, and real-time simulations makes HBM indispensable in GPU architecture.

The evolution of GPUs toward heterogeneous computing further expands HBM’s role beyond graphics. Multi-die packaging and 3D stacking bring memory closer to the GPU cores, dramatically improving data throughput. The rise of GPU-powered data centers, especially for cloud gaming and visualization, cements HBM’s role as mission-critical. Manufacturers are focusing on energy efficiency, ensuring GPUs with HBM deliver superior performance per watt, solidifying GPUs as HBM’s stronghold.

Application Insights: AI & Machine Learning Driving Growth

Artificial intelligence and machine learning are the fastest-growing applications in the HBM market. As AI models grow more complex, traditional DRAM falls short, making high-bandwidth, low-latency memory essential. HBM accelerates model training, reduces energy use, and enables smooth data transfer across neural network layers, all of which are critical for AI infrastructure.

Rising investment in generative AI, edge computing, and deep learning is boosting HBM adoption. Chipmakers integrate advanced memory controllers to maximize throughput and minimize bottlenecks. Specialized AI accelerators, including custom tensor cores and neuromorphic chips, depend heavily on HBM, making it vital to their performance and to continued innovation.

Memory Insights: Why Is HBM2 Leading the Market?

HBM2 dominates with a 50% market share due to its optimal balance of performance, capacity, and cost. Building on the vertically stacked DRAM dies connected via TSVs that first-generation HBM introduced, it dramatically boosts bandwidth and reduces power consumption. This architecture became the go-to standard for GPUs, FPGAs, and AI training systems.

HBM2’s widespread adoption stems from its strong performance-to-cost ratio and commercial reliability. Enhanced manufacturing yields and integration have lowered costs, broadening its use. While HBM3 is the future with higher speeds and efficiencies, HBM2 underpins most current high-performance hardware, bridging today’s systems with next-gen tech.

The Rise of HBM3

HBM3 is the fastest-growing variant, boasting bandwidths above 800 GB/s per stack. It delivers a massive increase in memory throughput and energy efficiency, ideal for AI, HPC, and exascale computing. Thermal and signaling optimizations allow HBM3 to perform reliably in data-intensive environments.

HBM3’s compatibility with advanced chiplet architectures and 2.5D/3D packaging aligns with trends toward compact, high-performance systems. Although it remains costly, its adoption in AI supercomputers, quantum simulations, and autonomous computing signals its eventual dominance.

End-User Industry Insights: Semiconductors Lead Demand

The semiconductor industry accounts for about 60% of HBM demand. As chip designs shift to parallel and heterogeneous integration, HBM becomes essential for performance. Semiconductor companies embed HBM in processors, GPUs, and AI accelerators to power next-gen computing.

Manufacturers co-engineer HBM with their chipsets to improve energy efficiency and thermal stability. As transistor scaling approaches its limits, memory innovations such as HBM become crucial, and advances in packaging further expand HBM's role in semiconductor market growth.

Emerging Automotive Adoption

The automotive sector is the fastest-growing HBM adopter, propelled by vehicle electrification and digitalization. Advanced driver-assistance systems (ADAS), in-car AI, and sensor fusion require massive bandwidth for real-time decisions. HBM enables faster data processing from radar, lidar, and cameras, critical for autonomous driving.

OEMs and Tier 1 suppliers are partnering with chipmakers to integrate HBM-enabled processors. HBM’s low latency and high efficiency suit harsh automotive environments and centralized vehicle architectures. This convergence of AI, connectivity, and sustainability secures HBM’s future in automotive intelligence.

Regional Insights: Asia Pacific’s Dominance

Asia Pacific dominates the HBM market, valued at USD 3.27 billion in 2025 and expected to reach USD 26.92 billion by 2034 with a CAGR of 26.38%. The region's strength lies in its robust semiconductor manufacturing, agile outsourced semiconductor assembly and test (OSAT) ecosystem, and large investments in advanced packaging.

Local foundries and assembly houses are rapidly increasing wafer-level stacking and interposer production. The growing presence of AI hardware startups boosts local adoption and co-design innovation. Cost advantages and coordinated industrial policies make Asia Pacific a strategic hub for HBM manufacturing and R&D.
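To put the regional figures in proportion, Asia Pacific's share of the global market can be computed directly from the report's own numbers. This is simple arithmetic on the stated values; `regional_share` is an illustrative helper.

```python
def regional_share(regional: float, global_total: float) -> float:
    """Fraction of the global market held by one region."""
    return regional / global_total


# Figures from the report, USD billion.
GLOBAL = {2025: 7.27, 2034: 59.16}
ASIA_PACIFIC = {2025: 3.27, 2034: 26.92}

for year in (2025, 2034):
    share = regional_share(ASIA_PACIFIC[year], GLOBAL[year])
    print(f"{year}: Asia Pacific share = {share:.1%}")
```

On these figures Asia Pacific holds roughly 45% of global value in both years, edging up slightly because its CAGR (26.38%) is marginally above the global rate (26.23%).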

China’s Growing Role

China is a major focus for HBM capacity growth with expanding semiconductor manufacturing and vertical integration. Investments in OSAT, interposer fabrication, and domestic DRAM aim to reduce reliance on external suppliers. Strong cloud provider and AI hardware demand position China as a key consumer and producer, pending technological advancement and ecosystem development.

North America’s Rapid Growth

North America is the fastest-growing region due to demand from hyperscale cloud providers, AI accelerator developers, and GPU companies. The region excels in system integration and design for high-bandwidth memory systems essential for advanced AI platforms.

Early access to roadmap products through strategic partnerships, together with high data center demand, drives investment in advanced packaging. The region's strength lies in design and engineering expertise, though manufacturing often happens elsewhere, with supply agreements supporting local capabilities.

High-Bandwidth Memory Market Companies

- SK Hynix Inc.: SK Hynix pioneered High-Bandwidth Memory technology and remains the dominant supplier of HBM3, powering AI and HPC systems worldwide. Its HBM3E chips are widely integrated into NVIDIA and AMD GPUs, with ongoing innovation focused on next-gen HBM4 and 3D-stacked architectures to enhance data throughput and energy efficiency.

- Samsung Electronics Co., Ltd.: Samsung is a major HBM producer, supplying HBM2E (Flashbolt) and HBM3 (Icebolt) memory for high-performance GPUs and AI accelerators. With deep expertise in DRAM fabrication and advanced 3D packaging, Samsung continues investing in HBM technologies for exascale computing and AI workloads.

- Micron Technology, Inc.: Micron is developing next-generation HBM and GDDR7 memory technologies for AI, machine learning, and high-performance graphics systems. Its HBM3 Gen2 development roadmap aims to achieve higher bandwidth and power efficiency for data center and supercomputing applications.

- Advanced Micro Devices, Inc. (AMD): AMD integrates HBM memory into its Radeon™ GPUs and Instinct™ data center accelerators to reduce latency and improve energy efficiency. The company’s collaboration with SK Hynix and Samsung has positioned it as a key innovator in GPU-memory integration for AI and HPC systems.

- NVIDIA Corporation: NVIDIA is the largest consumer of HBM memory, utilizing it extensively across its A100, H100, and upcoming Blackwell GPUs. Through close partnerships with SK Hynix, Samsung, and Micron, NVIDIA drives innovation in HBM technology for AI training, inference, and advanced computing.

Challenges and Cost Pressures

Despite its advantages, HBM adoption is constrained by high costs due to complex 3D stacking, expensive interposer materials, and strict quality testing. Limited manufacturing capacity and yield sensitivity raise production costs, while thermal management at high stack densities necessitates costly cooling solutions. Supply concentration also introduces geopolitical risks.
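The yield sensitivity of stacking compounds with stack height. Under a simplified multiplicative model, assuming each layer (die plus its bond) succeeds independently with the same probability and that one failed layer scraps the whole stack, stack yield is the per-layer yield raised to the number of layers, and cost per good stack scales with 1 / yield. The 99% per-layer yield is hypothetical, and the model ignores known-good-die testing and repair, which real flows use to limit these losses.

```python
def stack_yield(layer_yield: float, layers: int) -> float:
    """Probability that all layers succeed, assuming independent, identical layer yields."""
    return layer_yield ** layers


def cost_multiplier(yield_fraction: float) -> float:
    """Relative cost per good stack when failed stacks are scrapped (1 / yield)."""
    return 1 / yield_fraction


LAYER_YIELD = 0.99  # hypothetical per-layer yield (die + bond)

for layers in (4, 8, 12):
    y = stack_yield(LAYER_YIELD, layers)
    print(f"{layers}-high: yield {y:.1%}, cost per good stack x{cost_multiplier(y):.3f}")
```

Even a high per-layer yield erodes as stacks grow taller, which is one reason denser stacks raise production costs.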

Segments Covered in the Report

By Application

- Graphics Processing Units (GPUs)

- High-Performance Computing (HPC)

- Artificial Intelligence (AI) & Machine Learning (ML)

- Networking & Data Centers

- Automotive (ADAS & Autonomous Driving)

- Consumer Electronics (Gaming Consoles, VR/AR)

- Others (e.g., Medical Imaging, Aerospace)

By Memory Type

- HBM1

- HBM2

- HBM2E

- HBM3

- HBM4

By End-User Industry

- Semiconductors

- Automotive

- Healthcare

- Telecommunications

- Consumer Electronics

- Others

By Region

- North America

- Europe

- Asia-Pacific

- Latin America

- Middle East & Africa


To place an order or ask any questions, please contact us at sales@precedenceresearch.com or +1 804 441 9344.